It hides in plain sight—quietly diluting rankings, confusing search engines, and undermining otherwise great content. And in today’s AI-powered search ecosystem, duplication isn’t just an SEO issue—it’s a trust and clarity issue.

The problem? Most site owners don’t realize it’s happening until traffic stalls or rankings slip.

Let’s talk about how modern duplicate content checkers uncover these hidden issues—and why fixing them is one of the fastest ways to improve site performance.

What Duplicate Content Really Means Today

Duplicate content isn’t just copy-and-paste text anymore.

In modern websites, it often shows up as:

- Auto-generated pages from CMS templates

- Faceted navigation and filter URLs

- HTTP vs HTTPS or www vs non-www versions

- Parameterized URLs and tracking IDs

- Paginated and archive pages

- AI-generated content without proper differentiation

Search engines don’t penalize duplication by default—but they filter results. That means your best page might never be the one that ranks.

Why Duplicate Content Is Riskier in the AI Search Era

AI-driven search systems prioritize:

- Clear intent

- Unique value

- Semantic distinction

- Trusted sources

When multiple pages compete for the same query, AI systems struggle to identify the “best” answer. The result?

Lower visibility—or worse—your content being ignored entirely.

How Duplicate Content Checkers Help (Modern Edition)

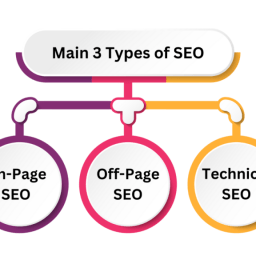

Today’s tools do far more than scan for identical paragraphs.

Modern SEO platforms like Semrush, Ahrefs, Screaming Frog, Sitebulb, and Google Search Console can:

- Identify near-duplicate pages

- Flag canonical conflicts

- Detect index bloat

- Highlight thin or auto-generated content

- Reveal crawl inefficiencies

This is where strategy meets data.

Hidden Site Issues Duplicate Content Checkers Uncover

1. Index Bloat

Too many similar pages = wasted crawl budget.

A duplicate checker helps identify pages that should be:

- Noindexed

- Consolidated

- Redirected

- Canonicalized

2. Poor Canonicalization

When search engines don’t know which version of a page is “the one,” rankings suffer.

Fixing canonical tags is one of the quickest technical SEO wins.

3. CMS and Plugin Conflicts

Many CMS platforms unintentionally create duplicate URLs.

Duplicate content tools expose:

- Tag and category overlaps

- Archive page duplication

- Plugin-generated content issues

4. AI Content Without Differentiation

AI-generated content isn’t the problem—undifferentiated AI content is.

Checkers help ensure:

- Pages add unique value

- Content isn’t repeating internal messaging

- Each page serves a distinct intent

5. Keyword Cannibalization

When multiple pages target the same keyword, they compete against each other.

Duplicate content analysis reveals:

- Overlapping keyword targets

- Pages that should be merged or repositioned

- Opportunities for clearer content hierarchy

How to Fix Duplicate Content (Without Nuking Your SEO)

Use canonical tags intentionally

Consolidate similar pages into stronger resources

Redirect outdated or redundant URLs

Differentiate AI-assisted content with human insight

Structure internal linking to support priority pages

Apply noindex where content adds no search value

At ONEWEBX, we treat duplicate content fixes as surgical—not destructive.

Duplicate Content Is a UX Problem Too

From a user perspective, duplication causes:

- Confusion

- Repetition

- Reduced trust

Fixing it improves:

- Navigation clarity

- Content relevance

- Conversion pathways

SEO wins follow naturally.

Why ONEWEBX Catches What Others Miss

Most agencies look for surface-level issues.

We look for patterns.

Our process combines:

- AI-assisted audits

- Technical SEO expertise

- UX and content strategy

- Privacy-aware analytics

That’s how we uncover hidden problems before they turn into ranking losses.