Let’s unpack the 8 most common duplicate content issues in 2026 and exactly how to fix them (the right way).

First: What Is Duplicate Content?

Duplicate content refers to substantial blocks of content that appear in more than one location — either on your website or across multiple websites.

Search engines like Google don’t typically “penalize” duplicate content outright. Instead, they:

- Choose which version to index

- Filter out duplicates

- Consolidate ranking signals

- Sometimes pick the wrong version

And that’s where the problem starts.

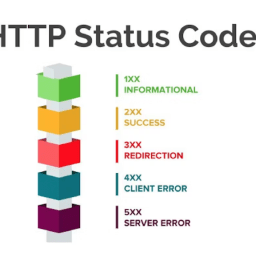

1. URL Variations (HTTP vs HTTPS, www vs non-www)

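The Issue:

When a site resolves over both HTTP and HTTPS, with and without www, every page is reachable at four different URLs.

That’s four versions of the same content.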

The Fix:

- Force HTTPS

- Choose a preferred domain version

- Implement 301 redirects

- Set canonical tags

Use tools like Google Search Console and Ahrefs to identify indexing inconsistencies.
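To make the redirect logic concrete, here is a minimal Python sketch that maps all four variants to one preferred URL. It assumes the HTTPS + www version is the preferred one and uses the placeholder domain `www.example.com`; the function names are illustrative, not part of any tool mentioned above.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: the https + www version is the preferred one.
PREFERRED_HOST = "www.example.com"


def strip_www(host: str) -> str:
    """Drop a leading 'www.' so both host variants compare equal."""
    return host[4:] if host.startswith("www.") else host


def preferred_url(url: str) -> str:
    """Return the single URL that every scheme/host variant should 301 to."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if strip_www(host) != strip_www(PREFERRED_HOST):
        return url  # a different domain; leave it untouched
    path = parts.path or "/"
    # Force https and the preferred host; keep the path and query string.
    return urlunsplit(("https", PREFERRED_HOST, path, parts.query, ""))


variants = [
    "http://example.com/pricing",
    "http://www.example.com/pricing",
    "https://example.com/pricing",
    "https://www.example.com/pricing",
]
redirect_targets = {preferred_url(v) for v in variants}
```

All four variants collapse to a single target. In practice the 301 itself usually lives in the web server or CDN configuration; a helper like this is mainly useful for checking that crawled URLs point where you expect.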

2. E-commerce Product Variations

The Issue:

Product pages with different colors, sizes, or filters often generate multiple URLs with nearly identical content.

Example:

/product/shoes?color=red

/product/shoes?color=blue

The Fix:

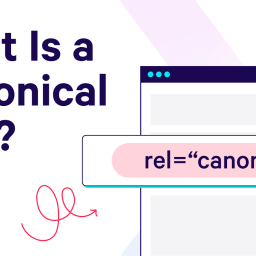

- Use canonical tags to point to the primary product page

- Consolidate variants where possible

- Write unique product descriptions

AI-generated product descriptions must still be original and customized. Generic AI text across dozens of products creates duplication fast.
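As a rough illustration of the canonical-tag fix, the sketch below strips variant parameters from a product URL and builds the tag that each variant page would carry. The parameter list and the `example.com` domain are assumptions; adjust them to whatever your store actually uses.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these query parameters only select a variant or a filter.
VARIANT_PARAMS = {"color", "size", "sort"}


def canonical_url(url: str) -> str:
    """Remove variant parameters so all variants share one canonical URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in VARIANT_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Build the <link> element to place in the variant page's <head>."""
    href = escape(canonical_url(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


red = canonical_tag("https://example.com/product/shoes?color=red")
blue = canonical_tag("https://example.com/product/shoes?color=blue")
```

Both variants produce the same tag, pointing at `/product/shoes`. Parameters outside the assumed list are kept, so a URL that changes the page content in a meaningful way is not silently merged.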

3. Pagination Issues

The Issue:

Blog category pages or product listings create paginated URLs:

/blog?page=1

/blog?page=2

Without proper structure, search engines may treat these as duplicate or low-value pages.

The Fix:

- Use proper internal linking

- Give each paginated page a unique title and meta description

- Avoid thin paginated content

Strategic site architecture matters more than ever.
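One simple way to keep titles and meta descriptions unique across a paginated series is to derive them from the page number. This is a minimal sketch; the function names and the wording of the suffixes are assumptions, not a required format.

```python
def page_title(base_title: str, page: int) -> str:
    """Return a distinct <title> for each page in a paginated series."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    return base_title if page == 1 else f"{base_title} (Page {page})"


def page_description(base_description: str, page: int, total_pages: int) -> str:
    """Return a distinct meta description for each page in the series."""
    if page == 1:
        return base_description
    return f"{base_description} Page {page} of {total_pages}."
```

For example, `page_title("SEO Blog", 2)` gives `SEO Blog (Page 2)`, while page 1 keeps the plain title.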

4. CMS-Generated Tag & Category Pages

The Issue:

Many CMS platforms automatically generate tag archives with minimal unique content.

These pages often:

- Compete with main category pages

- Provide little standalone value

- Create indexing clutter

The Fix:

- Noindex thin tag pages

- Add unique introductory copy

- Consolidate overlapping categories

Clean architecture improves crawl efficiency.
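The noindex rule for thin tag pages can be sketched as a small decision function. The thresholds and the `TagPage` structure below are hypothetical; pick values that match how your own archives look.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them to your own site.
MIN_POSTS = 5
MIN_INTRO_WORDS = 50


@dataclass
class TagPage:
    slug: str
    post_count: int
    intro_text: str = ""


def robots_meta(page: TagPage) -> str:
    """Return a robots meta tag: noindex for thin tag pages, index otherwise."""
    intro_words = len(page.intro_text.split())
    thin = page.post_count < MIN_POSTS or intro_words < MIN_INTRO_WORDS
    content = "noindex, follow" if thin else "index, follow"
    return f'<meta name="robots" content="{content}">'


thin_tag = robots_meta(TagPage(slug="misc", post_count=2))
rich_tag = robots_meta(TagPage(slug="seo", post_count=12, intro_text="word " * 60))
```

Using `follow` alongside `noindex` is a design choice here: the thin page stays out of the index while its links to posts remain crawlable.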

5. Copied or Syndicated Content

The Issue:

Republishing the same article across multiple sites without canonical attribution confuses search engines.

Guest posting? Fine.

Copy-pasting entire blog posts everywhere? Not fine.

The Fix:

- Use rel=canonical pointing to the original source

- Rewrite and adapt content uniquely

- Avoid mass duplication

Search engines prioritize original authority.
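To check how much a republished piece overlaps with the original, a common rough measure is Jaccard similarity over word shingles. The sketch below is one simple approach, not how any search engine actually scores duplication, and the shingle size of 3 is an assumption.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences ('shingles') of a fixed size."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Share of shingles two texts have in common (0.0 = none, 1.0 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


original = "Duplicate content splits ranking signals across several URLs"
copied = "Duplicate content splits ranking signals across several URLs"
unrelated = "Our bakery opens at seven every morning"
```

A score near 1.0 suggests a straight copy that needs a canonical or a rewrite; a low score suggests the adaptation is genuinely distinct at the wording level.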

6. AI-Generated Content at Scale

Let’s address the elephant in the room.

AI tools like ChatGPT can generate content quickly. But mass-producing lightly edited AI articles across similar topics creates semantic duplication: pages that use different words to say the same thing.

Search engines now evaluate:

- Topical depth

- Original experience

- Semantic variation

- Unique insights

The Fix:

- Add human expertise

- Include original examples

- Avoid template-style structures

- Use AI as an assistant, not an autopilot

At ONEWEBX, we combine AI efficiency with editorial refinement. That’s the difference.

7. Printer-Friendly Versions

The Issue:

Some sites generate separate “print” URLs that duplicate full content.

The Fix:

- Block printer pages from indexing

- Use canonical tags

Small technical details matter.
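One way to block print pages from indexing is to send an `X-Robots-Tag: noindex` response header for them. The sketch below only decides which URLs get the header; the `/print/` path prefix and the `print=1` parameter are assumptions about how a site might build its print URLs.

```python
from urllib.parse import parse_qs, urlsplit


def is_print_url(url: str) -> bool:
    """Assumption: print versions live under /print/ or use ?print=1."""
    parts = urlsplit(url)
    return parts.path.startswith("/print/") or parse_qs(parts.query).get("print") == ["1"]


def extra_headers(url: str) -> dict[str, str]:
    """Return response headers that keep print versions out of the index."""
    return {"X-Robots-Tag": "noindex"} if is_print_url(url) else {}
```

Regular pages get no extra header, so only the print duplicates are affected.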

8. Internal Content Cannibalization

The Issue:

Multiple blog posts target the same keyword or topic without differentiation.

Example:

- “Best SEO Tips”

- “Top SEO Strategies”

- “SEO Techniques for Ranking”

If they overlap heavily, they compete against each other.

The Fix:

- Merge overlapping articles

- Create comprehensive pillar pages

- Use a topic clustering strategy

Tools like SEMrush help identify keyword cannibalization.
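If you can export query and landing-page pairs from your analytics or rank-tracking tool, a few lines of Python will surface queries where more than one URL competes. The input format and the sample rows below are hypothetical.

```python
from collections import defaultdict


def cannibalized_queries(rows: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Given (query, url) pairs, return queries served by more than one URL."""
    urls_by_query: dict[str, set[str]] = defaultdict(set)
    for query, url in rows:
        urls_by_query[query.strip().lower()].add(url)
    return {q: sorted(urls) for q, urls in urls_by_query.items() if len(urls) > 1}


# Hypothetical export rows: (search query, landing page)
rows = [
    ("seo tips", "/blog/best-seo-tips"),
    ("SEO tips", "/blog/top-seo-strategies"),
    ("seo tips", "/blog/seo-techniques-for-ranking"),
    ("link building", "/blog/link-building-guide"),
]
conflicts = cannibalized_queries(rows)
```

Here the three overlapping posts all show up under `seo tips`, which makes them candidates for merging into one pillar page.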

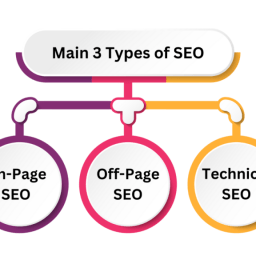

Why Duplicate Content Is More Complex in 2026

Search engines now use language models such as Google’s BERT to understand semantic meaning, not just exact word matching.

That means:

- Slight rewrites won’t fool algorithms

- Low-value variations get filtered

- Thin content is ignored

Add in privacy-driven data shifts and AI-generated search summaries, and content clarity becomes even more critical.

Duplicate confusion reduces trust signals.

And trust is currency.

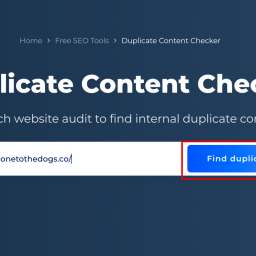

How to Audit Duplicate Content Properly

Here’s a streamlined process we use at ONEWEBX:

- Crawl the site with SEO audit tools

- Identify duplicate title tags and meta descriptions

- Review canonical tag implementation

- Analyze internal linking structure

- Review content overlap across topic clusters

- Check index coverage in Google Search Console

- Consolidate and restructure strategically
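The duplicate-title step of the audit is easy to script once you have crawled HTML. This is a minimal sketch using only the Python standard library; the `pages` mapping stands in for whatever your crawler outputs and is purely illustrative.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the <title> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html: str) -> str:
    parser = TitleParser()
    parser.feed(html)
    return " ".join(parser.title.split())  # collapse stray whitespace


def duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by normalized title and keep groups with more than one URL."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        groups[extract_title(html).lower()].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if title and len(urls) > 1}


# Illustrative crawl output: URL -> raw HTML
pages = {
    "/blog?page=1": "<html><head><title>Blog</title></head></html>",
    "/blog?page=2": "<html><head><title> Blog </title></head></html>",
    "/about": "<html><head><title>About</title></head></html>",
}
report = duplicate_titles(pages)
```

The same grouping idea works for meta descriptions; only the extraction step changes.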

Technical SEO isn’t glamorous — but it wins long term.

The UX Factor Most People Ignore

Duplicate content isn’t just an SEO issue.

It’s a user experience issue.

When users encounter repetitive, thin, or confusing content structures:

- Bounce rates increase

- Engagement drops

- Conversions decline

Modern SEO is inseparable from UX design.